Artificial Intelligence Without Limits: Exploring LLama.cpp

Fewer Resources, More Intelligence – LLama.cpp Redefines What’s Possible with AI Models on Your Own Computer

Continuing our series on 6 ways to run AI models locally without the need to access external APIs, today we will talk about LLama.cpp!

In recent years, the use of language models has rapidly expanded across various fields, from text processing to code generation and clinical data analysis.

One of the biggest challenges when working with these powerful models is the need for significant computational resources, especially memory and GPU.

To overcome this limitation, solutions like LLama.cpp have emerged, aiming to make robust language models more accessible on machines with limited resources.

In this post, we will build a Python application that calls a large language model (LLM) using the LLama.cpp library and answers a question.

We will cover how to download the model, install the library, and create and run the application, all on your own computer.

But first, what is LLama.cpp?

LLama.cpp (or LLaMa C++) is an optimized implementation of the LLama model architecture designed to run efficiently on machines with limited memory.

Developed by Georgi Gerganov (with over 390 collaborators), this C/C++ version provides a simplified interface and advanced features that allow language models to run without overloading the system.

With the ability to run in resource-constrained environments, LLama.cpp makes powerful language models more accessible and practical for a variety of applications.

How Does LLama.cpp Work?

LLama.cpp is an adaptation of the original LLama architecture, optimized for lighter execution. Some of the key features that set this implementation apart include:

Memory: The main advantage of LLama.cpp is its ability to reduce memory requirements, allowing you to run large language models on your own computer.

Computational Efficiency: In addition to reducing memory usage, LLama.cpp also focuses on improving execution efficiency, utilizing specific C++ code optimizations to speed up the process.

Result Quality: Despite being a lighter solution, LLama.cpp aims to preserve the quality of the results. Models run this way can still generate text and perform NLP tasks well, although heavier quantization may cost some accuracy.

Installation

Before we get started, you will need a computer with Python installed and a C/C++ compiler.
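As a quick sanity check, both prerequisites can be inspected from Python itself. The sketch below is only illustrative: the helper name find_compilers and the list of compiler names it looks for are assumptions made for this example, not part of LLama.cpp.

```python
import shutil
import sys


def find_compilers(candidates=("gcc", "g++", "cc", "clang")):
    """Map each candidate compiler name to its location on PATH, or None if missing."""
    return {name: shutil.which(name) for name in candidates}


# Report the running Python version and which compilers were found.
print(f"Python version: {sys.version_info.major}.{sys.version_info.minor}")
for name, path in find_compilers().items():
    print(f"{name}: {path or 'not found'}")
```

If every compiler shows up as "not found", the installation steps that follow are the place to fix it.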

In this tutorial, we will use WSL (Windows Subsystem for Linux), a compatibility layer developed by Microsoft that allows you to run a Linux operating system directly on Windows.

With WSL you can install distributions such as Ubuntu, Debian, or Fedora and use Linux command-line tools, scripts, and software from within your Windows environment.

After accessing WSL, we will need to install the necessary libraries. If you don't have the C/C++ compiler, type the following in the terminal:

sudo apt update

sudo apt install build-essential

This will update the list of available packages and install the build-essential package, which includes compilation tools like gcc (C compiler), g++ (C++ compiler), and other libraries for building.

Next, we will export the environment variable CC to point to the gcc compiler:

export CC=$(which gcc)

Now we can install the library using pip:

pip install llama-cpp-python

Getting the Model

Now, let's download the LLM we'll use in the application. In this tutorial, I’ll use Microsoft's Phi-3 in the GGUF version.

GGUF is the model file format used by LLama.cpp, designed for running language models on devices with limited memory and computational capacity. GGUF models are commonly quantized to 4, 5, or 8 bits per weight, which reduces memory usage and speeds up processing, making the format well suited to applications that require efficiency and fast responses.
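To get a feel for why quantization matters, here is a back-of-the-envelope estimate. It assumes roughly 3.8 billion parameters for Phi-3-mini and counts only the weights. Real GGUF files mix precisions and carry metadata, so actual file sizes will differ; treat the numbers as a rough guide. The function name is made up for this example.

```python
def estimate_weight_bytes(n_params: int, bits_per_weight: float) -> int:
    """Rough size of the weights alone: parameters x bits, converted to bytes."""
    return int(n_params * bits_per_weight / 8)


PHI3_MINI_PARAMS = 3_800_000_000  # assumption: approximate parameter count

# Compare unquantized 16-bit weights with common quantization levels.
for bits in (16, 8, 5, 4):
    size_gb = estimate_weight_bytes(PHI3_MINI_PARAMS, bits) / 1e9
    print(f"{bits:>2}-bit weights: ~{size_gb:.1f} GB")
```

Under these assumptions, going from 16-bit to 4-bit weights cuts the weight storage to a quarter, which is what brings a model of this size within reach of an ordinary laptop.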

To get the model, visit: https://huggingface.co/microsoft/Phi-3-mini-4k-instruct-gguf.

Click on "Files and versions" and then click the download icon next to the model file “Phi-3-mini-4k-instruct-q4.gguf”.

If you're using WSL, after downloading the file, move it to the Linux directory within your Windows environment, accessible via the path:

\\wsl$\[DistroName]\home

Example:

\\wsl$\Ubuntu-22.04\home\[your_username]\workspace\model

Note: Before doing this, create the "workspace" and "model" folders in your home directory to store the model and the application.
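The folder setup can also be scripted from inside WSL. This is a minimal sketch that assumes the same layout as above (a "workspace" folder containing a "model" folder) and the file name from the download step. The helper names prepare_model_dir and model_is_present are invented for this example.

```python
from pathlib import Path

MODEL_FILENAME = "Phi-3-mini-4k-instruct-q4.gguf"


def prepare_model_dir(home: Path) -> Path:
    """Create <home>/workspace/model if it does not exist yet and return its path."""
    model_dir = home / "workspace" / "model"
    model_dir.mkdir(parents=True, exist_ok=True)
    return model_dir


def model_is_present(model_dir: Path) -> bool:
    """Tell whether the downloaded GGUF file has been copied into model_dir."""
    return (model_dir / MODEL_FILENAME).is_file()
```

Calling prepare_model_dir(Path.home()) creates the folders in your Linux home directory, and model_is_present can confirm that the file arrived after you copy it over from Windows.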

Creating the Python Application

The next step is to create a Python application to call our library and access the Phi-3 model.

Create a file named app.py and put this code inside:

from llama_cpp import Llama

# Load the model
llm = Llama(
    model_path="model/Phi-3-mini-4k-instruct-q4.gguf",  # path to the model file
    n_ctx=512,  # max context window size
)

prompt = "How would you explain the internet to a person from the Middle Ages?"

# Inference
output = llm(
    f"<|user|>\n{prompt}<|end|>\n<|assistant|>",
    max_tokens=256,  # responses up to 256 tokens
    stop=["<|end|>"],
    echo=True,  # include the prompt in the returned text
)

print(output['choices'][0]['text'])

In this code, we perform the following steps:

Import the llama_cpp library

Load the model

Define the prompt (question)

Run inference (generate the response from the model)

Display the response
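If you plan to ask more than one question, the prompt formatting and the inference call can be wrapped in small helpers. The following is a sketch, not part of the library: build_phi3_prompt and ask are hypothetical names, and ask only assumes that llm can be called with the same arguments used in app.py.

```python
def build_phi3_prompt(question: str) -> str:
    """Wrap a question in the Phi-3 chat markers used in app.py."""
    return f"<|user|>\n{question}<|end|>\n<|assistant|>"


def ask(llm, question: str, max_tokens: int = 256) -> str:
    """Send one question to a Llama-style callable and return the generated text."""
    output = llm(
        build_phi3_prompt(question),
        max_tokens=max_tokens,
        stop=["<|end|>"],
        echo=False,  # return only the generated text, without the prompt
    )
    return output["choices"][0]["text"].strip()
```

With the llm object from app.py, a call would look like ask(llm, "What is a GGUF file?"). Passing echo=False here is a design choice for this sketch, so the helper returns just the answer.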

Note: For more details on the available parameters, visit the model page: https://huggingface.co/microsoft/Phi-3-mini-4k-instruct-gguf

Running the Application

To run the Python application, navigate to the folder where the application was created (for example, "workspace") in the terminal and execute the command:

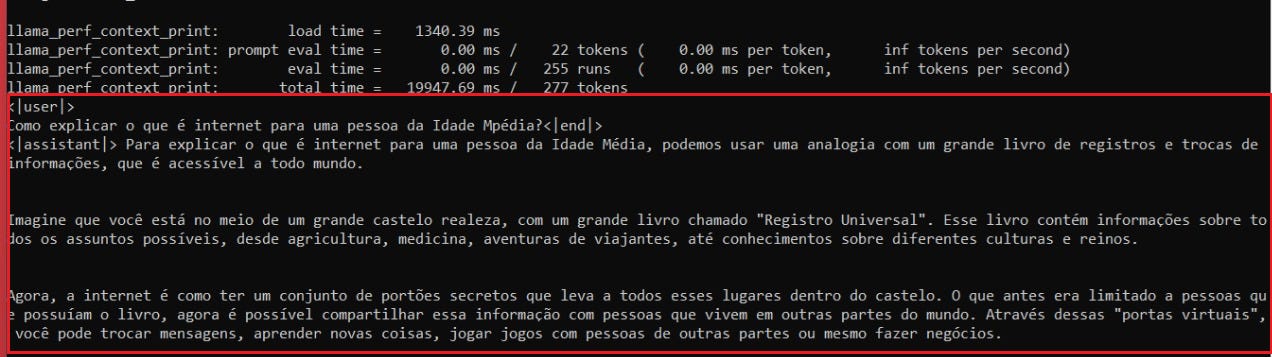

python app.py

The output will contain the response to our question after the <|assistant|> tag.
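Because app.py passes echo=True, the returned text also includes the prompt. If you only want the answer, a small helper along these lines could strip everything up to the tag. The name extract_answer is an assumption for this example.

```python
ASSISTANT_TAG = "<|assistant|>"


def extract_answer(echoed_text: str) -> str:
    """Return the text after the last <|assistant|> marker.

    If the marker is absent, the whole text is returned (stripped).
    """
    return echoed_text.rpartition(ASSISTANT_TAG)[2].strip()
```

In app.py this would be used as print(extract_answer(output['choices'][0]['text'])).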

For me, the response to the question "How would you explain the internet to a person from the Middle Ages?" came out like this:

<|assistant|> To explain the internet to a person from the Middle Ages, we can use an analogy of a large book of records and exchanges of information, accessible to everyone.

Imagine you are in the middle of a great royal castle with a huge book called the "Universal Registry." This book contains information on all possible subjects, from agriculture, medicine, adventurers' tales, to knowledge of different cultures and kingdoms.

Now, the internet is like having a set of secret gates that leads to all these places within the castle. What used to be limited to people who had the book, is now possible to share this information with people living in different parts of the world. Through these "virtual doors," you can exchange messages, learn new things, play games with people from other parts, or even do business.

Conclusions

This application is a small example of how to use llama-cpp-python, the Python binding for LLama.cpp, to call a model and generate a response to a specific prompt, but it can easily be adapted for other tasks and applications.

The library can be used in a wide range of applications, such as virtual assistants, text analysis, or explanations in various contexts.

With it, we can work with powerful language models without the need for large computational resources, making it ideal for projects that require efficiency and scalability on machines with limited capacity.

Let us know in the comments what the output of your model was!

Hope you enjoyed the post. See you next time! 💗