MCP: Anthropic's Open Protocol That Is Connecting AIs to the Real World

Generative AI, typically represented by large language models (LLMs), is incredibly powerful.

However, one of the biggest challenges these models face is interacting with the real world: accessing up-to-date data, integrating with specific tools, and connecting to enterprise systems. Imagine a genius trapped in a library. He knows a lot but has no way to follow the latest news or manipulate tools to perform tasks in the present.

This is where the Model Context Protocol (MCP) comes in: an open-source initiative launched by Anthropic (the creator of Claude) in November 2024. MCP aims to create a universal standard for securely and efficiently connecting AI models to various data sources and tools.

In today’s article, we’ll explore what MCP is, how it works, its practical applications, and its impact on the evolution of AI.

The Problem: AI’s Contextual Limitations

Current LLMs generally operate in isolation: they are trained on massive volumes of text, but that knowledge is frozen at training time. On their own, they lack direct access to:

Real-time data: Ask a standalone LLM, "What’s today’s date?", and unless the application supplies the date, it can only guess from its training data.

Private databases: On their own, LLMs cannot directly access CRM records, check inventory, or analyze business documentation.

External tools: Connecting an LLM to APIs, internal systems, and databases has always required custom, complex, and difficult-to-maintain solutions.

This lack of connectivity creates data silos and severely limits AI’s ability to provide truly relevant, up-to-date, and useful responses for real-world tasks. Every integration becomes a separate project, making scalability expensive and time-consuming.

The Solution: Introducing the Model Context Protocol (MCP)

MCP emerges as an elegant and standardized solution to this challenge. Think of it as the "USB-C of AI integrations"—a universal connector that allows different AI models (the "devices") to connect to a wide range of data sources and tools (the "peripherals") using a single standard.

What MCP Does:

Defines an open standard: Establishes a common language for communication between AI and external sources.

Simplifies integration: Reduces the need for multiple custom-built solutions.

Facilitates bidirectional communication: Allows AI applications to both read data from external systems and send data or actions to them, through MCP Clients and MCP Servers.

Focuses on security: Designed for secure transmission and access control.

By adopting MCP, developers can build more robust, reliable AI systems that are, most importantly, better connected to the context they need to operate efficiently.

How It Works: The MCP Architecture

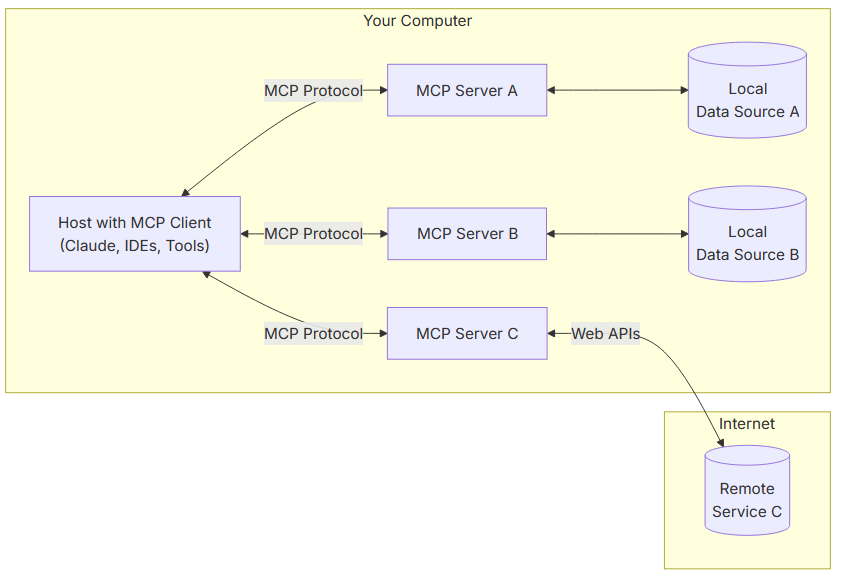

The MCP structure follows a client-server model, where an application (host) can connect to multiple servers:

Hosts (Client Applications): These are the applications that use AI, such as assistants, IDEs, and chatbots. They contain an MCP Client, which manages communication. The host initiates data requests or actions.

MCP Servers: These are lightweight, standalone programs that act as bridges. Each server exposes data or functionalities from a specific source (a database, an API, a file system, etc.) through the MCP protocol. They often run locally and control which resources are exposed and how.

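Resources (Data and Tools): These are what MCP Servers make available to the AI: data (files, database schemas, API results) and tools (executing SQL queries, calling external APIs, manipulating files). The MCP specification treats them as separate primitives, "resources" for data and "tools" for actions.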
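The roles described above can be sketched in plain Python. This is a toy, in-process model and not the MCP SDK: `ToyHost`, `ToyClient`, `ToyServer`, and the two example tools are hypothetical names, and a real client talks to its server over a transport rather than through direct method calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyServer:
    """Stand-in for an MCP Server: exposes named tools for one source."""
    name: str
    tools: Dict[str, Callable[..., object]] = field(default_factory=dict)

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **arguments: object) -> object:
        if tool not in self.tools:
            raise KeyError(f"{self.name} has no tool named {tool!r}")
        return self.tools[tool](**arguments)


@dataclass
class ToyClient:
    """Stand-in for an MCP Client: holds the connection to one server."""
    server: ToyServer

    def list_tools(self) -> List[str]:
        return self.server.list_tools()

    def call_tool(self, tool: str, **arguments: object) -> object:
        return self.server.call_tool(tool, **arguments)


@dataclass
class ToyHost:
    """Stand-in for a host application connected to several servers."""
    clients: Dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        # One client per server connection.
        self.clients[server.name] = ToyClient(server)

    def available_tools(self) -> Dict[str, List[str]]:
        return {name: c.list_tools() for name, c in self.clients.items()}

    def call(self, server_name: str, tool: str, **arguments: object) -> object:
        return self.clients[server_name].call_tool(tool, **arguments)


# Two hypothetical servers, each wrapping a different source.
files = ToyServer("files", {"read_file": lambda path: f"<contents of {path}>"})
weather = ToyServer("weather", {"forecast": lambda city: f"Sunny in {city}"})

host = ToyHost()
host.connect(files)
host.connect(weather)
```

The host never needs to know how a server reaches its data; it only sees the list of tools each connection offers and routes calls by server name.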

Operation Flow

A user asks a question or gives a command to the host application (e.g., "List products priced below R$50 in my database.").

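The model decides that it needs a tool the server offers (here, a database query), and the host's MCP Client sends the corresponding request to the MCP Server using the standardized protocol.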

The MCP Server accesses the local or remote resource (e.g., executes the query in a local SQLite database).

The MCP Server returns the information (or action result) to the MCP Client.

The Host receives the information and uses it to generate the final response for the user.

Many MCP Servers run locally, which helps with privacy and security. For example, when a local database is accessed, the database itself stays on the user's machine; only the results the server chooses to return are passed to the host and, through it, to the model.
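The flow above can be sketched as a single request and response. MCP messages are JSON-RPC 2.0, and tool invocations use the `tools/call` method. Everything else here is an assumption made for illustration: the `list_products_below` tool, the `products` table and its rows, and the fact that client and server share one process instead of communicating over a transport.

```python
import json
import sqlite3

# Hypothetical local database standing in for the user's product catalog.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE products (name TEXT, price REAL)")
db.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [("Notebook", 30.0), ("Backpack", 120.0), ("Pen", 5.5)],
)


def error_reply(request_id, code: int, message: str) -> str:
    """Build a JSON-RPC error response."""
    error = {"code": code, "message": message}
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "error": error})


def handle_request(raw: str) -> str:
    """Toy server side: decode one JSON-RPC request and answer it."""
    request = json.loads(raw)
    params = request.get("params", {})
    if request.get("method") != "tools/call":
        return error_reply(request.get("id"), -32601, "Method not found")
    if params.get("name") != "list_products_below":
        return error_reply(request.get("id"), -32602, "Unknown tool")
    # Step 3: the server accesses the local resource (parameterized query).
    max_price = float(params["arguments"]["max_price"])
    rows = db.execute(
        "SELECT name, price FROM products WHERE price < ? ORDER BY price",
        (max_price,),
    ).fetchall()
    # Step 4: the server returns the result to the client.
    result = {"content": [{"type": "text", "text": json.dumps(rows)}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# Step 2: the client sends a standardized request on behalf of the host.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "list_products_below", "arguments": {"max_price": 50}},
}
response = json.loads(handle_request(json.dumps(request)))

# Step 5: the host hands this text to the model to write the final answer.
products = json.loads(response["result"]["content"][0]["text"])
```

Note that the SQL lives entirely in the server; the model only supplies a structured argument (`max_price`), which is one way a server keeps control over what can be done with the resource.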

Key Advantages and Features of MCP

✅ Open and Free Standard – Open-source protocol and SDKs (Python, TypeScript, C#), encouraging community adoption and contribution.

✅ Reusability and Modularity – An MCP Server built for a data source (e.g., GitHub) can be used by any MCP-compatible host application.

✅ Flexibility – Allows switching between different LLM providers without rebuilding all data/tool integrations.

✅ Security – Designed to expose functionalities in a controlled way; later revisions of the specification add an OAuth-based authorization framework for remote servers.

✅ Enhanced Context Management – MCP is not just about tools; servers can also expose resources (data the host can load into the model's context) and prompts (reusable templates). The host can then fetch relevant context on demand instead of packing everything into a limited context window up front.

✅ Growing Ecosystem – There is already a growing list of community-maintained MCP Servers.
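The reusability and flexibility points can be illustrated with a small sketch. An MCP Server describes each tool with a `name`, a `description`, and a JSON Schema under `inputSchema`; a host can reshape that one description for whichever LLM provider it uses. The two target shapes below reflect our understanding of the Anthropic and OpenAI tool-calling formats and should be treated as assumptions to check against the providers' current documentation. The tool itself is hypothetical.

```python
from typing import Any, Dict

# One tool, as a server might describe it when the host asks for its tools.
mcp_tool: Dict[str, Any] = {
    "name": "list_products_below",
    "description": "List products priced below a maximum value.",
    "inputSchema": {
        "type": "object",
        "properties": {"max_price": {"type": "number"}},
        "required": ["max_price"],
    },
}


def to_anthropic_style(tool: Dict[str, Any]) -> Dict[str, Any]:
    """Reshape an MCP tool description (assumed Anthropic-style layout)."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }


def to_openai_style(tool: Dict[str, Any]) -> Dict[str, Any]:
    """Reshape an MCP tool description (assumed OpenAI-style layout)."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }


anthropic_tool = to_anthropic_style(mcp_tool)
openai_tool = to_openai_style(mcp_tool)
```

The server is written once; only this thin adapter layer in the host changes when the LLM provider does.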

Use Cases for MCP

Enterprise and Corporate Tools

Companies like Block and Apollo use MCP to connect internal systems.

Integration with tools like Slack, Google Drive, and Notion is already available.

Software Development

Companies like Zed, Replit, and Sourcegraph use MCP for contextual access to code repositories.

AI can access technical documentation and suggest improvements in real time.

AI Agents

Connecting AI to databases, enabling complex queries in natural language.

Accessing dynamic information such as weather forecasts and live events.

How to Get Started with MCP

Explore Pre-Built Servers: Anthropic and the community have already made servers available for Google Drive, Slack, GitHub, SQLite, and more. Lists can be found on platforms like MCP.so and Smithery.

Try Host Applications: Claude Desktop is an example of an application that supports local MCP Servers.

Use the SDKs: Official SDKs in Python, TypeScript, and C# are available for building your own servers or integrating MCP Clients into your applications.

Contribute to the Project: As an open-source project, the community can create new servers and expand its functionalities.

Integration with Tools like LangChain: Utilities already exist to facilitate the use of MCP Servers within popular frameworks like LangChain.
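As a starting point for the first two steps, a host usually needs to be told which servers to launch. The sketch below builds such a configuration as JSON. The `mcpServers` layout follows what Claude Desktop's configuration file uses, to the best of our knowledge; the `uvx` launcher, the `mcp-server-sqlite` package name, the `--db-path` flag, and the database path are assumptions to verify against the documentation of the server you actually install.

```python
import json
from typing import Any, Dict


def build_host_config(db_path: str) -> Dict[str, Any]:
    """Return a host configuration that launches one local SQLite server."""
    return {
        "mcpServers": {
            # The key is just a label the host shows for this server.
            "sqlite": {
                "command": "uvx",  # assumed launcher
                "args": ["mcp-server-sqlite", "--db-path", db_path],  # assumed CLI
            }
        }
    }


# Placeholder path; replace it with the location of your own database.
config_text = json.dumps(build_host_config("/path/to/products.db"), indent=2)
```

Writing `config_text` to the host's configuration file and restarting the host is typically enough for the new server's tools to appear.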

Conclusion

The Model Context Protocol (MCP) is more than just a technical specification—it's a vision for a future where artificial intelligence interacts with the digital world seamlessly, securely, and in a standardized way.

By breaking down data silos and simplifying integration with tools, MCP has the potential to unlock new capabilities and make AI truly context-aware and more useful than ever.

As adoption grows, we can expect:

A Rich Ecosystem – An explosion of MCP Servers available for all kinds of data and tools.

Standardized Remote Connections – The evolution of the protocol to include discovery and secure connection to remote MCP Servers (via HTTP/SSE), enabling scenarios where web services directly publish their functionalities via MCP.

More Powerful AI Agents – The potential for "Meta Agents" (AIs that build other AIs) or "Self-Evolving Agents", which discover and integrate new MCP tools autonomously as needed—a fascinating (and perhaps slightly eerie) future!

We encourage developers, businesses, and AI enthusiasts to explore MCP, experiment with its possibilities, and contribute to this growing open-source ecosystem.

🚀 The future of connected AI is being built now.