Nanochat: A ChatGPT Clone for Just $100

Andrej Karpathy, a heavyweight name in the AI world, has just launched nanochat, an open-source repository that promises to democratize the development of ChatGPT-style chatbots.

The proposal is ambitious: with an investment of approximately $100 and about four hours of training on an 8-GPU H100 node, any developer or enthusiast can train their own conversational AI model!

Unlike previous projects that focused on isolated steps, nanochat offers a complete and minimalist solution, covering the entire lifecycle of a language model, from tokenization to inference and the web interface, with full transparency.


What is nanochat?

nanochat is the evolution of nanoGPT, also launched by Karpathy.

While nanoGPT focused exclusively on the pre-training of models, nanochat expands this vision to include all the necessary stages to create a functional chatbot.

All of this is contained in a lean codebase of approximately 8,000 lines, designed to be “hackable,” modular, and easy to understand.

The philosophy behind nanochat is to provide a solid foundation and a clear starting point for anyone who wants to learn, research, or experiment with LLMs.

Key Features

End-to-End Solution: The repository covers the creation of a custom tokenizer in Rust, pre-training, intermediate training, supervised fine-tuning (SFT), reinforcement learning (RL), and an inference engine.

Surprising Cost-Effectiveness: The main attraction is the ability to train a functional model for around $100. For those willing to invest more, training for approximately $1,000 (about 41 hours) results in an enhanced model capable of handling math, programming, and reasoning tasks.

Minimalist Codebase: With few dependencies and clean code, nanochat invites the community to explore, modify, and build on its foundations.

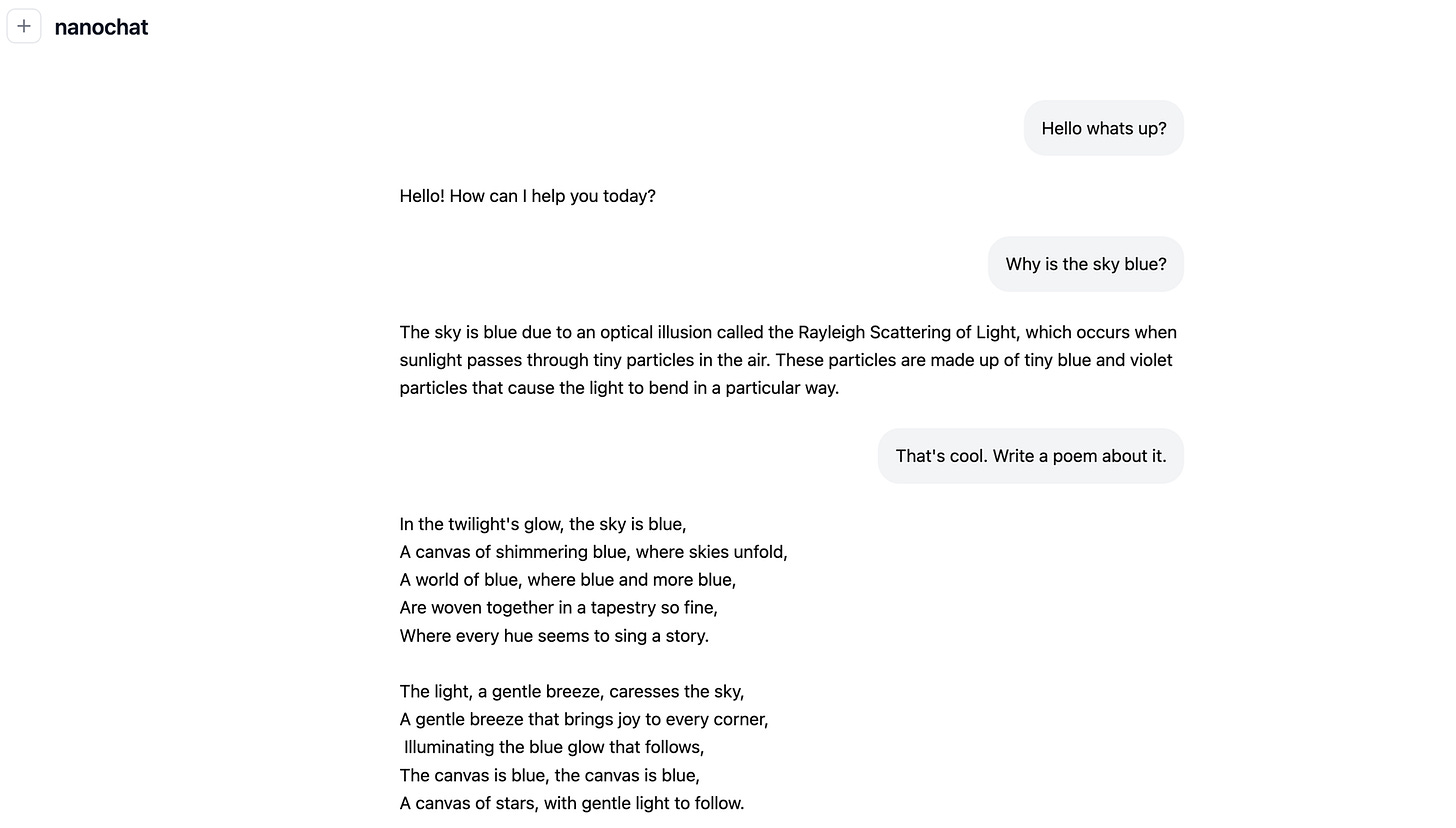

Accessible Interfaces: Interact with the trained model through a command-line interface (CLI) or a web interface that emulates the ChatGPT experience.

Automated Reports: At the end of each training run, a markdown report is generated, summarizing evaluation metrics and the model’s progress through the different training stages.

How Does It Work?

nanochat uses a Llama-style Transformer architecture with refinements such as rotary position embeddings (RoPE) and QK normalization. The training process is structured in several stages:

Tokenization: A custom Rust-based tokenizer is trained from scratch.

Pre-training: The model is first trained on a large web dataset, such as FineWeb-Edu.

Intermediate Training: In this phase, the model is exposed to more specific data, including conversations, multiple-choice questions, and math problems to enhance its reasoning and tool-usage capabilities.

Supervised Fine-Tuning (SFT): The model is refined with conversational data to adapt its behavior to that of a chat assistant.

Reinforcement Learning (RL): Optionally, reinforcement learning can be applied to further optimize performance on specific tasks, such as math problems.

Inference: The custom inference engine supports KV caching for efficient text generation and includes a Python sandbox for using tools like a calculator.
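
To make the KV caching idea concrete, here is a minimal sketch in pure Python. It is not nanochat's inference engine: the function names, the toy weight matrices, and the single attention head are all assumptions chosen for illustration. It shows that caching the keys and values of earlier tokens gives the same attention outputs as recomputing them at every step, while doing far fewer projections.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]

def decode_without_cache(embeddings, w_q, w_k, w_v):
    """Baseline: recompute keys and values for the whole prefix at every step."""
    outputs, projections = [], 0
    for t in range(len(embeddings)):
        keys, values = [], []
        for emb in embeddings[: t + 1]:
            keys.append(matvec(w_k, emb))
            values.append(matvec(w_v, emb))
            projections += 1
        outputs.append(attend(matvec(w_q, embeddings[t]), keys, values))
    return outputs, projections

def decode_with_cache(embeddings, w_q, w_k, w_v):
    """KV caching: project each token once and reuse the stored results."""
    outputs, projections = [], 0
    cached_keys, cached_values = [], []  # the KV cache
    for emb in embeddings:
        cached_keys.append(matvec(w_k, emb))
        cached_values.append(matvec(w_v, emb))
        projections += 1
        outputs.append(attend(matvec(w_q, emb), cached_keys, cached_values))
    return outputs, projections

# Made-up toy inputs: 4 token embeddings of dimension 2 and 2x2 weights.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[0.5, 0.5], [-0.5, 0.5]]
w_v = [[2.0, 0.0], [0.0, 3.0]]

baseline_out, baseline_projections = decode_without_cache(embeddings, w_q, w_k, w_v)
cached_out, cached_projections = decode_with_cache(embeddings, w_q, w_k, w_v)
print(baseline_projections, cached_projections)  # prints "10 4"
```

For a sequence of n tokens, the baseline performs n(n+1)/2 key/value projections and the cached version performs n, which is why the cache matters more as the generated text gets longer.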

Performance and Scalability

nanochat's results scale with the time and compute invested:

$100 (~4 hours): Produces a model with basic conversational abilities, comparable to a “preschool-aged child,” according to Karpathy. Suitable for educational experiments and rapid prototyping.

$300 (~12 hours): Slightly outperforms GPT-2 on the CORE metric, with enough coherence for simple practical applications.

$1,000 (~41 hours): The model demonstrates coherence in math and programming problems, approaching the behavior of larger models like GPT-3-small.
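
As a quick sanity check on these tiers, the sketch below derives the hourly rate implied by each cost and duration quoted above. It assumes every tier runs on the same 8-GPU node as the $100 run, which the figures do not state outright; the function name is ours, and real cloud prices vary by provider.

```python
# (label, cost in USD, training time in hours), as quoted in the tiers above.
TIERS = [("$100", 100, 4), ("$300", 300, 12), ("$1,000", 1000, 41)]

# Assumption: all tiers use one 8x H100 node, like the $100 run.
GPUS_PER_NODE = 8

def implied_rates(cost_usd, hours, gpus=GPUS_PER_NODE):
    """Return (USD per node-hour, USD per GPU-hour) implied by a tier."""
    node_rate = cost_usd / hours
    return node_rate, node_rate / gpus

for label, cost, hours in TIERS:
    node_rate, gpu_rate = implied_rates(cost, hours)
    print(f"{label}: ~${node_rate:.2f} per node-hour, ~${gpu_rate:.2f} per GPU-hour")
```

All three tiers work out to roughly $24 to $25 per node-hour, so the price differences come almost entirely from training time rather than from more expensive hardware.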

How to Get Started with Nanochat?

For those interested in training their own model, the process is straightforward:

Clone the Repository:

git clone https://github.com/karpathy/nanochat

Set Up the Environment: Provision a cloud server with GPUs (for example, 8x H100 for the $100 “speedrun”).

Run the Script: The speedrun.sh script automates the entire pipeline, from downloading the data to the final evaluation.

Interact with the Model: After training, launch the web interface or CLI (command-line interface) to chat with your creation.

Conclusion

Andrej Karpathy’s nanochat is more than just a code repository: it is an invitation for the community to explore the depths of language model training in a way that was previously inaccessible to most.

By drastically reducing cost and complexity, this project has the potential to accelerate innovation and learning across the artificial intelligence ecosystem.

The goal is precisely to provide a “strong baseline” so developers can fully understand, confidently modify, and adapt it to specific needs.

The code is available on GitHub under the MIT license, ready to be studied, modified, and improved by the community!