Agents with LangChain: When AI Needs to Make Decisions

Part 4 of the series "Everything You Need to Know About LangChain (Before Starting an AI Project)"

Welcome to the fourth post in our LangChain series!

In the previous posts, we explored how to integrate various LLMs, implement RAG (Retrieval-Augmented Generation) systems, and add conversational memory to your chatbot.

Today, we’ll dive into a very interesting topic: AI agents!

Imagine you're chatting with an assistant that not only answers your questions but also decides which tools to use to help you.

Need up-to-date information? It searches the web. Want to perform complex calculations? It grabs the calculator. Need to query a database? It accesses it and brings back the results.

That’s exactly what agents in LangChain do — they turn your AI from a simple responder into a true problem-solver.


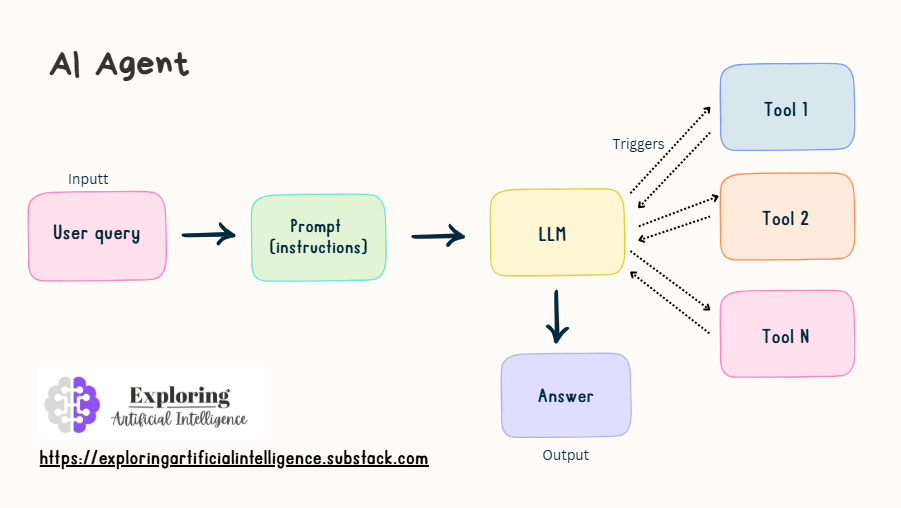

What Are AI Agents?

An agent is like an intelligent coordinator that has access to a set of tools and autonomously decides which one to use in each situation. Unlike a traditional chain where we define a fixed flow, the agent analyzes our question and dynamically chooses the best path to reach the answer.

The “magic” happens when the agent enters a reasoning loop: it thinks about the problem, selects a tool (from the available ones), performs the action, evaluates the result, and decides whether it needs to do anything else or if it can already give you the final answer.
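To make that loop concrete, here is a minimal, framework-free sketch in plain Python. It assumes a hypothetical "brain" that returns structured decisions instead of calling a real LLM; `Decision`, `lookup`, `scripted_brain`, and `run_agent` are illustrative names, not LangChain APIs. In a real agent, the LLM makes each of these choices.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    """One reasoning step: either a tool call or a final answer."""
    tool: Optional[str] = None
    tool_input: str = ""
    final_answer: Optional[str] = None


# Hypothetical tool: a tiny fact lookup standing in for a web search
FACTS = {"capital of iceland": "Reykjavik"}


def lookup(query: str) -> str:
    return FACTS.get(query.lower(), "no result")


TOOLS: dict = {"lookup": lookup}


def scripted_brain(question: str, history: list) -> Decision:
    """Stand-in for the LLM: search first, then answer from the last observation."""
    if not history:
        return Decision(tool="lookup", tool_input="capital of Iceland")
    _tool, _input, observation = history[-1]
    return Decision(final_answer=f"The capital of Iceland is {observation}.")


def run_agent(question: str, brain: Callable, tools: dict, max_iterations: int = 3) -> str:
    history = []  # (tool, tool_input, observation) for every step taken so far
    for _ in range(max_iterations):
        decision = brain(question, history)      # think about the problem
        if decision.final_answer is not None:    # enough information to answer?
            return decision.final_answer
        tool = tools.get(decision.tool)          # select a tool
        if tool is None:
            observation = f"unknown tool {decision.tool!r}"
        else:
            observation = tool(decision.tool_input)  # perform the action
        history.append((decision.tool, decision.tool_input, observation))  # evaluate next round
    return "Stopped: max_iterations reached without a final answer."


print(run_agent("What is the capital of Iceland?", scripted_brain, TOOLS))
```

The `max_iterations` cap matters: without it, a brain that never decides it is done would loop forever.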

Agent Architecture

The agent is made up of three main components:

Tools are the capabilities we provide the agent — web search, calculator, API access, database queries, Python code execution, or any custom function we create.

The Brain is the language model (LLM) that analyzes the user’s question and decides which tools to use. Here we can use GPT-4, Claude, Gemini, or any LLM that can reliably follow the agent's reasoning format and choose tools.

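The Strategy is the type of agent, which defines how it approaches problems. In LangChain's classic agent API it is selected with AgentType: for example, ZERO_SHOT_REACT_DESCRIPTION (used in the example below) picks tools based only on their descriptions, CONVERSATIONAL_REACT_DESCRIPTION is designed for chat with memory, and STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION supports tools that take multiple inputs.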
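The strategy shapes how the brain is prompted. With a zero-shot ReAct strategy, the model chooses tools purely from their names and descriptions, which are written into its prompt. The sketch below is a simplified illustration of that idea, assuming a hypothetical `Tool` class and `build_react_prompt` helper; it is not LangChain's exact prompt template.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    description: str  # the only information the brain has about the tool


def build_react_prompt(question: str, tools: list) -> str:
    """Simplified zero-shot ReAct prompt: list the tools, then fix the output format."""
    tool_lines = "\n".join(f"{t.name}: {t.description}" for t in tools)
    tool_names = ", ".join(t.name for t in tools)
    return (
        "Answer the question as best you can. You have access to these tools:\n\n"
        f"{tool_lines}\n\n"
        "Use this format:\n"
        "Thought: what you should do next\n"
        f"Action: one of [{tool_names}]\n"
        "Action Input: the input to the action\n"
        "Observation: the result of the action\n"
        "(Thought/Action/Action Input/Observation can repeat)\n"
        "Final Answer: the answer to the original question\n\n"
        f"Question: {question}\n"
        "Thought:"
    )


tools = [
    Tool("search", "Searches the web. Use it for current events and facts."),
    Tool("calculator", "Evaluates arithmetic. Use it for math questions."),
]
print(build_react_prompt("What is the population of Reykjavik?", tools))
```

Because the description is all the model sees, a vague description leads to poor tool choices no matter how good the underlying function is.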

A Practical Example

To illustrate how this works in practice, let’s create a simple agent with LangChain that uses Google's Gemini model as its brain and fetches current information from the web with DuckDuckGo search.

DuckDuckGo is a search engine focused on user privacy. Unlike other popular search engines, it does not collect, share, or store personal information or browsing history.

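```python
import os

from langchain.agents import AgentType, initialize_agent
from langchain_community.agent_toolkits.load_tools import load_tools
from langchain_google_genai import ChatGoogleGenerativeAI

# Set your Google API key (safer: export GOOGLE_API_KEY in your shell instead of hard-coding it)
os.environ["GOOGLE_API_KEY"] = "YOUR API KEY"

# Initialize the Gemini model; a low temperature makes its output less random
llm = ChatGoogleGenerativeAI(model="models/gemini-1.5-flash", temperature=0.3)

# Load the DuckDuckGo search tool (requires the duckduckgo-search package)
tools = load_tools(["ddg-search"], llm=llm)

# Create a zero-shot ReAct agent that picks tools based on their descriptions.
# Note: initialize_agent is deprecated in newer LangChain versions but still illustrates the idea.
agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,      # print each reasoning step
    max_iterations=3,  # stop after at most 3 steps
)

# Run the agent with a question
question = "What is the capital of Iceland and what is its current population?"
answer = agent.invoke({"input": question})
print(answer["output"])
```

When you run this code, the agent will typically: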

1. Analyze the question and realize it needs current information.
2. Decide to use the DuckDuckGo search tool.
3. Search for the capital of Iceland.
4. Process the results and identify that it is Reykjavik.
5. Search again, if necessary, to find population data.
6. Compile a complete answer with both pieces of information.

Setting verbose=True prints this entire reasoning process in real time (each Thought, Action, Action Input, and Observation), and it’s really cool to watch how the agent "thinks" through each step!
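Behind that verbose output, the agent has to turn the model's free-text reply into either a tool call or a final answer. Here is a simplified, hypothetical parser for the Thought/Action/Action Input/Final Answer format that a zero-shot ReAct agent uses. `Action`, `Finish`, and `parse_react_step` are illustrative names, the tool name `duckduckgo_search` in the sample text is only for illustration, and LangChain's built-in output parsers handle more edge cases than this sketch.

```python
import re
from dataclasses import dataclass


@dataclass
class Action:
    tool: str
    tool_input: str


@dataclass
class Finish:
    answer: str


# "Action: <tool>" on one line, "Action Input: <input>" on the next
ACTION_RE = re.compile(r"Action\s*:\s*(.+)\n\s*Action\s*Input\s*:\s*(.+)")


def parse_react_step(text: str):
    """Turn one model response into an Action to run or a Finish to return."""
    if "Final Answer:" in text:
        return Finish(text.split("Final Answer:", 1)[1].strip())
    match = ACTION_RE.search(text)
    if match is None:
        raise ValueError(f"Could not parse model output: {text!r}")
    return Action(match.group(1).strip(), match.group(2).strip().strip('"'))


step = "Thought: I need current data.\nAction: duckduckgo_search\nAction Input: Reykjavik population"
print(parse_react_step(step))
```

When the model's reply does not follow the format, the parser raises an error instead of guessing. That is one reason agents can fail in ways a fixed chain does not.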

🚀 Complete code + practical examples are available in Colab Notebooks! Find "LangChain-agents.ipynb" and try creating your own agents in minutes.

When to Use Agents?

Agents are recommended when you need flexibility and dynamic decision-making. If your application needs to handle varied questions that might require different types of information or processing, an agent is the right choice.

They’re great for building personal assistants, customer service systems that need to access multiple data sources, or any application where you can’t predict exactly what workflow will be needed.

On the other hand, if you have a well-defined, always-the-same process, a traditional chain might be more efficient and predictable.

The Power of Custom Tools

One major advantage of agents is that you can create your own tools. Need it to query an internal CRM? Just create a tool for that. Want it to process specific company files? Another custom tool.

These tools can be anything from simple Python functions to complex integrations with external APIs. The key is to clearly define what each tool does and when it should be used.
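LangChain provides a `@tool` decorator (in `langchain_core.tools`) that builds a tool from a function, using its name and docstring as the tool's name and description. The sketch below imitates that pattern in plain Python so it runs without LangChain; `register_tool`, `REGISTRY`, and the CRM lookup are hypothetical.

```python
import inspect
from typing import Callable

# Hypothetical registry: tool name -> (description, function)
REGISTRY: dict = {}


def register_tool(func: Callable[[str], str]) -> Callable[[str], str]:
    """Register a function as a tool; its docstring becomes the description the agent sees."""
    description = inspect.getdoc(func)
    if not description:
        raise ValueError(f"Tool {func.__name__!r} needs a docstring saying what it does and when to use it")
    REGISTRY[func.__name__] = (description, func)
    return func


@register_tool
def lookup_customer(customer_id: str) -> str:
    """Look up a customer's status in the internal CRM. Input: a customer ID such as 'C-42'."""
    fake_crm = {"C-42": "active, premium plan"}  # stand-in for a real CRM API call
    return fake_crm.get(customer_id.strip(), "customer not found")


for name, (description, _) in REGISTRY.items():
    print(f"{name}: {description}")
```

Requiring a docstring is a deliberate choice: the agent decides when to call a tool from its description, so "Input: a customer ID" is as important as the function body.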

Important Considerations

Working with agents requires some caution. They are less predictable than traditional chains: they might take unexpected routes, call tools more often than necessary, or consume more tokens (and therefore cost more) than expected.

It’s crucial to implement safety controls, especially if the tools can execute code or access sensitive systems. Always set clear boundaries on what the agent can and cannot do.
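One simple boundary is an allow-list enforced in your own code, outside the LLM, so a tool call is rejected even if the model asks for it. This is a minimal sketch assuming hypothetical tools and a hypothetical `make_guarded_dispatcher` helper (not a LangChain API). In the LangChain example above, `max_iterations=3` is another limit of this kind, capping how many steps the agent may take.

```python
from typing import Callable, Dict


class ToolNotAllowed(Exception):
    """Raised when the agent requests a tool or input outside its boundaries."""


def make_guarded_dispatcher(
    tools: Dict[str, Callable[[str], str]],
    allowed: set,
    max_input_chars: int = 500,
) -> Callable[[str, str], str]:
    """Only run allow-listed tools, and reject oversized inputs before they reach a tool."""
    def dispatch(name: str, tool_input: str) -> str:
        if name not in allowed or name not in tools:
            raise ToolNotAllowed(f"Tool {name!r} is not permitted for this agent")
        if len(tool_input) > max_input_chars:
            raise ToolNotAllowed(f"Input for {name!r} exceeds {max_input_chars} characters")
        return tools[name](tool_input)
    return dispatch


tools = {
    "search": lambda query: f"results for {query!r}",  # hypothetical harmless tool
    "run_python": lambda code: "executed",             # hypothetical risky tool
}
dispatch = make_guarded_dispatcher(tools, allowed={"search"})
print(dispatch("search", "Reykjavik population"))
```

The strongest control is still not handing the agent a risky tool at all; a guard like this is a second line of defense.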

Monitoring is also important. Since agents make decisions autonomously, we need to track their actions to understand how they are solving problems and identify possible improvements.
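In LangChain, callback handlers (subclasses of `BaseCallbackHandler`, with hooks such as `on_tool_start` and `on_tool_end`) are the built-in place for this kind of tracking. The framework-free sketch below shows the underlying idea of recording every tool call; `TraceLog` and `ToolCallRecord` are hypothetical names.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ToolCallRecord:
    tool: str
    tool_input: str
    output: str
    seconds: float


@dataclass
class TraceLog:
    records: List[ToolCallRecord] = field(default_factory=list)

    def wrap(self, name: str, func: Callable[[str], str]) -> Callable[[str], str]:
        """Return a version of func that records every call it receives."""
        def traced(tool_input: str) -> str:
            start = time.perf_counter()
            output = func(tool_input)
            elapsed = time.perf_counter() - start
            self.records.append(ToolCallRecord(name, tool_input, output, elapsed))
            return output
        return traced


log = TraceLog()
search = log.wrap("search", lambda query: f"results for {query!r}")  # hypothetical tool
search("capital of Iceland")
search("Reykjavik population")
for record in log.records:
    print(f"{record.tool}({record.tool_input!r}) -> {record.output!r} in {record.seconds:.4f}s")
```

Reviewing records like these shows which tools the agent relies on, where it repeats searches, and which steps are slow.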

The Future of Agents

Agents represent an important step toward more autonomous and capable AI systems. As LLMs become more powerful and tools more sophisticated, we’ll see agents able to perform increasingly complex tasks with minimal human supervision.

Pro tip: Start simple with a basic agent and a few fundamental tools. As you understand its behavior better, gradually add more capabilities. ✨ See more agent examples here.

This is the fourth post in the LangChain series. Check out the previous posts about LLM integration, RAG implementation, chatbot memory, and keep following for a full view of the possibilities of this powerful framework.