Create Your Own Search System with EmbeddingGemma, SQLite, and Ollama

In last week’s article, we explored EmbeddingGemma, a new high-performance embedding model developed by Google and specifically designed for on-device applications.

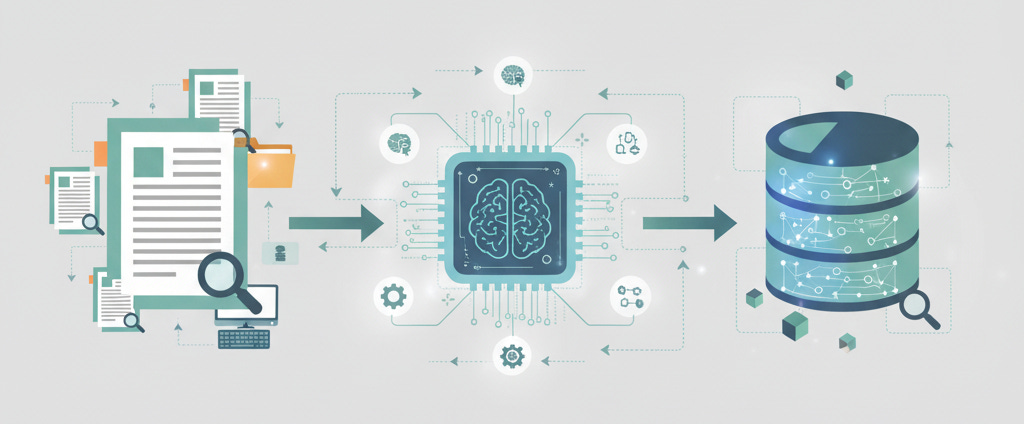

Today, we’ll build a local semantic search system using open, freely available tools. The goal is to show how combining the EmbeddingGemma model, the SQLite database, and the Ollama platform gives you a powerful setup for personal projects.

What is EmbeddingGemma?

To recap, EmbeddingGemma is a text embedding model developed by Google. In short, it takes a word, a sentence, or a paragraph and converts it into a list of numbers (a vector).

These numbers encode the meaning of the text. Texts with similar meanings produce vectors that are “close” to each other in vector space, which lets you find information by meaning and context rather than by exact keywords alone.
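To make “close” concrete, a common closeness measure for embeddings is cosine similarity: the cosine of the angle between two vectors, where values near 1 mean “same direction”. The minimal sketch below uses only the Python standard library. The three vectors are invented toy values for illustration, not real EmbeddingGemma output, which has hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional "embeddings", made up for this example only.
margherita = [0.9, 0.1, 0.0]
pepperoni = [0.8, 0.3, 0.1]
tax_form = [0.0, 0.2, 0.9]

# Two pizza texts should score higher than a pizza vs. an unrelated text.
print(round(cosine_similarity(margherita, pepperoni), 3))
print(round(cosine_similarity(margherita, tax_form), 3))
```

With real embeddings, the same comparison is what ranks stored texts against a user’s question.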

Why Use SQLite?

SQLite is a lightweight relational database that runs inside your application, with no separate server process. It is well suited to projects that need simple but reliable data storage. Its big advantage is that the entire database lives in a single file, which makes setup and portability extremely easy.

For our search system, it will store the original texts alongside the vectors (embeddings) generated by EmbeddingGemma, acting as our knowledge base.
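As a rough illustration of that role, here is a minimal sketch using Python’s built-in `sqlite3` module (not `sqlite-vec`, and not the article’s own script). It assumes a hypothetical `documents` table and stores each vector as a BLOB of packed little-endian float32 values next to its text. The vector is a placeholder; in the real application it would come from EmbeddingGemma.

```python
import sqlite3
import struct


def to_blob(vector):
    """Pack a list of floats into little-endian float32 bytes."""
    return struct.pack(f"<{len(vector)}f", *vector)


def from_blob(blob):
    """Unpack little-endian float32 bytes back into a list of floats."""
    return list(struct.unpack(f"<{len(blob) // 4}f", blob))


# ":memory:" keeps the example self-contained; pass a path such as
# "pizzeria.db" (hypothetical name) to get a persistent single-file database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE documents ("
    " id INTEGER PRIMARY KEY,"
    " text TEXT NOT NULL,"
    " embedding BLOB NOT NULL)"
)

# Placeholder vector standing in for a real EmbeddingGemma embedding.
conn.execute(
    "INSERT INTO documents (text, embedding) VALUES (?, ?)",
    ("Margherita: tomato, mozzarella, basil", to_blob([0.5, 0.25, -1.0])),
)
conn.commit()

text, blob = conn.execute("SELECT text, embedding FROM documents").fetchone()
print(text, from_blob(blob))
```

Packing as float32 keeps each dimension at 4 bytes, which is also the element format vector extensions such as `sqlite-vec` work with.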

And Ollama?

Ollama is a tool that makes it easy to run language models locally, including LLMs and embedding models such as EmbeddingGemma. A single command downloads a model, and Ollama then serves it so we can interact with it. It handles the technical complexity, so we can focus on what really matters: our application.
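Once Ollama is running, applications talk to it over a local HTTP API. The sketch below, using only the standard library, builds a request for Ollama’s `/api/embed` endpoint on its default address `http://localhost:11434`, assuming the model tag `embeddinggemma:latest`. The helper names `build_embed_request` and `embed` are hypothetical, and `embed` only works with a live Ollama server. The original script may call Ollama differently, for example through the official `ollama` Python package.

```python
import json
import urllib.request

# Ollama's default local address; adjust if your server runs elsewhere.
OLLAMA_EMBED_URL = "http://localhost:11434/api/embed"


def build_embed_request(texts, model="embeddinggemma:latest"):
    """Build a POST request asking Ollama to embed a list of texts."""
    payload = json.dumps({"model": model, "input": texts}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_EMBED_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(texts, model="embeddinggemma:latest"):
    """Return one embedding (a list of floats) per input text.

    Requires a running Ollama server with the model already pulled.
    """
    with urllib.request.urlopen(build_embed_request(texts, model)) as response:
        return json.loads(response.read())["embeddings"]


# Building the request needs no server; sending it with embed() does.
req = build_embed_request(["Which pizzas are vegetarian?"])
print(req.get_method(), req.full_url)
```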

What Does Each Component Do?

In our application, we will:

Generate embeddings locally with EmbeddingGemma via Ollama

Store each embedding in a SQLite database

Query our database using similarity search
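The three steps can be sketched end to end with plain `sqlite3`. This is a brute-force alternative, not the article’s `sqlite-vec` approach. It registers a Python function as a SQL function named `cosine_sim` and lets SQLite rank rows with `ORDER BY`. The table name `menu`, the JSON storage format, and the 3-dimensional vectors are all hypothetical stand-ins for real EmbeddingGemma embeddings.

```python
import json
import math
import sqlite3


def cosine_sim(a_json, b_json):
    """SQL-callable cosine similarity between two JSON-encoded vectors."""
    a, b = json.loads(a_json), json.loads(b_json)
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


conn = sqlite3.connect(":memory:")
# Make the Python function available inside SQL queries.
conn.create_function("cosine_sim", 2, cosine_sim)
conn.execute("CREATE TABLE menu (text TEXT, embedding TEXT)")

# Steps 1 and 2: "generate" (here: hard-code) embeddings and store them.
rows = [
    ("Margherita pizza with fresh basil", [0.9, 0.1, 0.0]),
    ("Pepperoni pizza with extra cheese", [0.8, 0.3, 0.1]),
    ("Opening hours: 6 pm to 11 pm", [0.0, 0.2, 0.9]),
]
conn.executemany(
    "INSERT INTO menu VALUES (?, ?)",
    [(text, json.dumps(vec)) for text, vec in rows],
)


def search(query_vector, k=2):
    """Step 3: return the k stored texts most similar to the query, best first."""
    return conn.execute(
        "SELECT text, cosine_sim(embedding, ?) AS score "
        "FROM menu ORDER BY score DESC LIMIT ?",
        (json.dumps(query_vector), k),
    ).fetchall()


results = search([0.85, 0.2, 0.05])
for text, score in results:
    print(f"{score:.3f}  {text}")
```

This scans every row on each query, which is fine for a small menu. For larger collections, a vector extension such as `sqlite-vec` does the search inside SQLite instead.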

Coding

Our semantic search script is based on this script, which we adapted to work in a pizzeria context.

🚀 To access the full, ready-to-use code for Colab, visit our Notebooks section. Look for EmbeddingGemma-BuscaSemantica-sqlite-ollama.ipynb.

The first step is to install and start Ollama on your computer (see instructions here). Once Ollama is running, download the model with the command:

ollama pull embeddinggemma:latest
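To confirm the download worked, `ollama list` shows the locally available models. The same information comes from Ollama’s `/api/tags` endpoint, which returns JSON with a `models` list whose entries carry a `name` field. The sketch below reads that field. The helper names are hypothetical, and `is_model_pulled` needs a running Ollama server. The final line uses a hand-written sample response of the same shape so it runs offline.

```python
import json
import urllib.request

# Ollama endpoint listing locally available models (default address).
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_response):
    """Extract model names from a parsed /api/tags JSON response."""
    return [m["name"] for m in tags_response.get("models", [])]


def is_model_pulled(name="embeddinggemma:latest"):
    """Ask the local Ollama server whether `name` has been downloaded."""
    with urllib.request.urlopen(OLLAMA_TAGS_URL) as response:
        return name in model_names(json.loads(response.read()))


# Offline illustration with a minimal hand-written response.
sample = {"models": [{"name": "embeddinggemma:latest"}]}
print("embeddinggemma:latest" in model_names(sample))
```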

Next, install the other required libraries:

pip install sqlite-vec numpy

Now, create a file named embeddinggemma-sqlite-ollama.py and paste the following content:
