Last week, we saw how artificial neural networks work and how they are inspired by the functioning of the human brain.

We explored the basic structure of an artificial neuron, discussed the main types of layers, and understood how learning occurs through the adjustment of weights.

This week, we will address deep neural networks and the field built around them, known as deep learning.

Deep learning models consist of multiple layers of artificial neurons, which allows them to learn complex representations from data. This approach is widely used in tasks such as image recognition, natural language processing (NLP), and autonomous decision-making.

In this article, we will explore what deep learning is, how it works, and some of its applications.

What is Deep Learning?

Deep learning is a subfield of machine learning that uses deep neural networks to analyze large volumes of data. Neural networks are loosely inspired by the way the human brain processes information, and they allow algorithms to learn and adapt from large datasets without task-specific rules written by hand.

One of the main advantages of deep learning is that it identifies patterns and features in the data automatically. In many cases, this reduces or removes the need for manual feature engineering, making the process more efficient and adaptable.

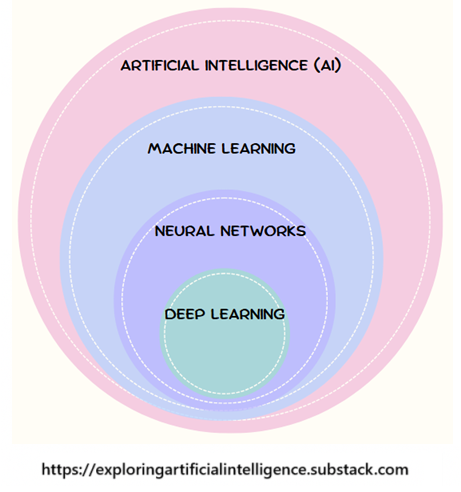

The image above shows the hierarchy between Artificial Intelligence, Machine Learning, Neural Networks, and Deep Learning.

As we can see, AI is the broadest concept, encompassing any system that mimics human intelligence. Within it, machine learning allows computers to learn patterns from data without being explicitly programmed with rules. Neural networks are one family of machine learning models, inspired by the human brain and composed of interconnected artificial neurons capable of identifying complex patterns. Finally, deep learning is a specialization of neural networks, characterized by the use of multiple layers of artificial neurons, which enables the automatic extraction of features and the learning of complex representations from large volumes of data.

How Does Deep Learning Work?

Deep learning uses artificial neural networks composed of multiple layers of interconnected artificial neurons (hence the term "deep"). The layers process the data hierarchically: each one works on the output of the previous layer and captures a different aspect of the data, which allows the system to identify complex patterns. Through training with large volumes of data, the network learns to perform complex tasks such as image recognition.

Architecture

As we saw in the article about Neural Networks, deep neural networks are made up of three main types of layers:

Input layer: receives raw data, such as image pixels or words in a text.

Hidden layers: process the information, extracting patterns at different levels of abstraction. Each neuron in these layers applies a mathematical transformation (such as a linear combination followed by an activation function) and passes the results to the next layer.

Output layer: generates the final prediction, such as image classification or a chatbot response.
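To make the three layer types concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes and weight values are hypothetical, hand-picked only for illustration, and the choice of ReLU in the hidden layers and softmax at the output is an assumption rather than a rule.

```python
import math

def relu(z):
    # Activation used in the hidden layers: negative values become zero.
    return max(0.0, z)

def softmax(zs):
    # Turns raw output scores into probabilities that sum to 1.
    m = max(zs)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    # Linear combination: one weighted sum plus bias per neuron (row of weights).
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(x, layers):
    # layers is a list of (weights, biases) pairs; the last pair is the output layer.
    a = x
    for weights, biases in layers[:-1]:
        a = [relu(z) for z in dense(a, weights, biases)]
    weights, biases = layers[-1]
    return softmax(dense(a, weights, biases))

# Hypothetical network: 3 inputs -> 2 hidden -> 2 hidden -> 2 output classes.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
]
probs = forward([1.0, 2.0, 3.0], layers)
print(probs)  # one probability per class
```

In a trained network the weights would come from the training process described next, not from hand-picked values.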

Training

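The model learns by adjusting the weights of the connections between neurons. Backpropagation computes how much each weight contributed to the prediction error (the gradient of the loss), and an optimization algorithm such as gradient descent uses those gradients to update the weights and reduce the error. Each training step has three stages: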

Forward pass: data passes through the network layer by layer, going through activation functions (ReLU, Sigmoid, Softmax, etc.).

Error calculation: the model's output is compared with the real value using a loss function (such as cross-entropy for classification or MSE for regression).

Backward pass (backpropagation): the error is propagated backward through the network to compute the gradient of the loss with respect to each weight. An optimizer, such as plain gradient descent, Adam, or RMSprop, then uses these gradients to adjust the weights.
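The three steps above can be sketched as a small training loop in plain Python. This is an illustrative example under stated assumptions, not a reference implementation: the XOR dataset, the network size (2 inputs, 3 hidden neurons, 1 output), the tanh and sigmoid activations, the learning rate, and the function name `train_xor` are all choices made here for the example. It uses plain gradient descent rather than Adam or RMSprop to keep the update rule visible.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(epochs=3000, lr=0.2, hidden=3, seed=0):
    """Train a small 2-hidden-1 network on XOR; return the mean loss per epoch."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        gw1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        total = 0.0
        for x, y in data:
            # Forward pass: tanh hidden layer, sigmoid output.
            h = [math.tanh(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j])
                 for j in range(hidden)]
            p = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            p = min(max(p, 1e-12), 1.0 - 1e-12)  # keep log() finite
            # Error calculation: binary cross-entropy loss.
            total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
            # Backward pass: chain rule from the output back to each weight.
            dz2 = p - y  # gradient of the loss w.r.t. the output pre-activation
            gb2 += dz2
            for j in range(hidden):
                gw2[j] += dz2 * h[j]
                dz1 = dz2 * w2[j] * (1.0 - h[j] ** 2)  # tanh'(z) = 1 - tanh(z)^2
                gb1[j] += dz1
                gw1[j][0] += dz1 * x[0]
                gw1[j][1] += dz1 * x[1]
        n = len(data)
        losses.append(total / n)
        # Weight update: plain gradient descent on the averaged gradients.
        b2 -= lr * gb2 / n
        for j in range(hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            w1[j][0] -= lr * gw1[j][0] / n
            w1[j][1] -= lr * gw1[j][1] / n
    return losses

losses = train_xor()
print(f"first epoch loss: {losses[0]:.4f}, last epoch loss: {losses[-1]:.4f}")
```

The loss printed for the last epoch should be lower than the one for the first epoch, which is the sign that the weight adjustments are reducing the prediction error. In practice, frameworks compute these gradients automatically instead of requiring them to be written by hand.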

Importance of Depth

The depth of a network allows it to model abstract representations, which makes deep learning effective for tasks such as facial recognition, machine translation, and image generation. Deeper networks can learn more sophisticated patterns, but they require more data and computational power, and they are more exposed to issues such as overfitting and exploding or vanishing gradients. These issues are usually handled with techniques such as regularization, careful weight initialization, and normalization layers.
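The vanishing and exploding gradient problem can be illustrated with a deliberately simplified model: a chain of single sigmoid neurons, each with the same weight and the same pre-activation value. During backpropagation, the gradient is multiplied by the weight times the sigmoid derivative at every layer, so it shrinks or grows exponentially with depth. The function name `gradient_scale` and the chosen values are assumptions made for this sketch; real networks use weight matrices, but the effect is analogous.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    # Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0.
    s = sigmoid(z)
    return s * (1.0 - s)

def gradient_scale(depth, z=0.0, weight=1.0):
    # Factor applied to a gradient after passing back through `depth`
    # identical single-neuron sigmoid layers.
    return (weight * sigmoid_grad(z)) ** depth

for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth), gradient_scale(depth, weight=8.0))
```

With a weight of 1, the factor is 0.25 per layer, so after 10 layers the gradient is scaled by 0.25 to the power of 10, which is below one millionth (vanishing). With a weight of 8, the factor is 2 per layer, so after 10 layers the gradient is scaled by 1024 (exploding).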

This approach has driven advances in AI, making deep learning one of the cornerstones of modern artificial intelligence.

Difference Between Deep Learning and Neural Networks

All deep neural networks are neural networks, but not all neural networks are deep. Deep learning specifically refers to the use of neural networks with many layers to solve more complex problems with large amounts of data.

Neural Networks:

Definition: These are machine learning models inspired by the way the human brain works, consisting of layers of interconnected artificial neurons.

Structure: Neural networks can be shallow, with only a few layers, such as a perceptron network with a single hidden layer. Shallow networks are simpler and are often applied to relatively simple tasks.

Example: A simple neural network can be used to predict a single variable, such as a price, from a small tabular dataset.

Deep Learning:

Definition: It is a subfield within neural networks that focuses on training neural networks with multiple hidden layers, known as deep neural networks.

Structure: Deep neural networks have many layers (sometimes dozens or hundreds), allowing the model to learn hierarchical representations and capture more subtle patterns and abstractions in large, complex datasets.

Example: Convolutional neural networks (CNNs) for computer vision, or recurrent neural networks (RNNs) for natural language processing.

Main Types of Deep Learning

The main types of deep learning are defined by the architecture of neural networks and the type of problem they are designed to solve. Here are the main ones:

Convolutional Neural Networks (CNNs): Primarily used in computer vision tasks, such as image recognition.

Recurrent Neural Networks (RNNs): Ideal for processing sequential data, such as time series or text.

Long Short-Term Memory (LSTM): A variant of RNNs designed to mitigate the vanishing gradient problem that affects standard RNNs. Its gating mechanism allows the network to "remember" information for longer periods, which makes it highly effective in tasks such as machine translation, text generation, and time series analysis.

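Generative Adversarial Networks (GANs): Used to generate new data, such as images, music, or even text. They train two networks against each other: a generator that produces samples and a discriminator that tries to tell them apart from real data.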

Autoencoders: Networks used for data compression and dimensionality reduction. They consist of two parts: the encoder, which maps input data to a latent space, and the decoder, which reconstructs the data from that space.

Transformers: Architectures originally developed for natural language processing (NLP) tasks such as machine translation, summarization, and question answering, and now also applied in other areas such as computer vision. The main innovation of transformers is the attention mechanism, which allows the model to weigh the relevance of each part of the input when processing any other part, even in long data sequences.
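As an illustration of the attention mechanism mentioned for transformers, the sketch below implements scaled dot-product attention in plain Python. It is a minimal single-head version with no learned projections or masking, and the function names and input values are assumptions chosen for the example.

```python
import math

def softmax(zs):
    m = max(zs)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    # For each query: score every key, turn the scores into weights with
    # softmax, and return the weighted average of the value vectors.
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs

# Two identical keys receive equal weight, so the output is the mean of the values.
averaged = attention([[1.0, 0.0]],
                     [[1.0, 0.0], [1.0, 0.0]],
                     [[1.0, 2.0], [3.0, 4.0]])
print(averaged)  # [[2.0, 3.0]]

# With different keys, the output leans toward the value whose key best matches the query.
focused = attention([[4.0, 0.0]],
                    [[1.0, 0.0], [0.0, 1.0]],
                    [[1.0, 2.0], [3.0, 4.0]])
print(focused)
```

The second call shows the "focus" effect: the query matches the first key much better than the second, so the result is pulled toward the first value vector.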

Deep Learning Applications

Deep learning has been widely applied across various sectors due to its ability to handle large volumes of data and learn complex representations. Some of the main applications of deep learning include:

Computer Vision: Facial recognition, medical image diagnosis, and object detection in videos.

Natural Language Processing: Machine translation, chatbots, and virtual assistants, including those built on large language models such as GPT-4.

Autonomous Vehicles: Self-driving cars and drones that use deep learning to navigate the real world.

Healthcare: Disease diagnosis and prediction, genetic analysis, and optimization of personalized treatments.

Conclusion

Deep learning has transformed various fields of knowledge and technology, providing innovative and powerful solutions to complex problems. Its ability to handle large volumes of data, learn high-level representations, and perform tasks with precision and autonomy has enabled notable advances in fields like computer vision, natural language processing, healthcare, industrial automation, and the financial industry.

However, despite its vast applications and impressive performance in various scenarios, deep learning still faces some challenges. The need for large volumes of labeled data for training, high computational cost, and difficulty in interpreting models are issues that need to be addressed to enable widespread and effective adoption in new contexts. Additionally, the reliance on large amounts of data may introduce biases, leading to unexpected or unethical results in some applications.

Despite these challenges, deep learning is at the core of the current technological revolution, with its impact still expanding into new areas. As these challenges are overcome, the technology has the potential to further transform sectors, providing faster, more precise, and personalized solutions. The continuous evolution of neural network architectures and the expansion of their applications indicate a promising future, offering new opportunities for researchers, businesses, and society.

Did you like this article? Share it with your colleagues!

If you have any questions or suggestions, leave your comment below!