Neural Networks: AI Inspired by the Human Brain

Just like neurons in the brain, neural networks learn from experience, transforming data into knowledge.

Continuing our series on the Top 8 Machine Learning Algorithms, today we dive into the fascinating world of neural networks!

Artificial neural networks are one of the pillars of modern artificial intelligence. Inspired by the workings of the human brain, these networks have revolutionized the way computers learn and make decisions.

From image recognition to text generation, neural networks are everywhere. In this article, we’ll explore how they work, their key applications, and how to get started with them.

What Are Neural Networks?

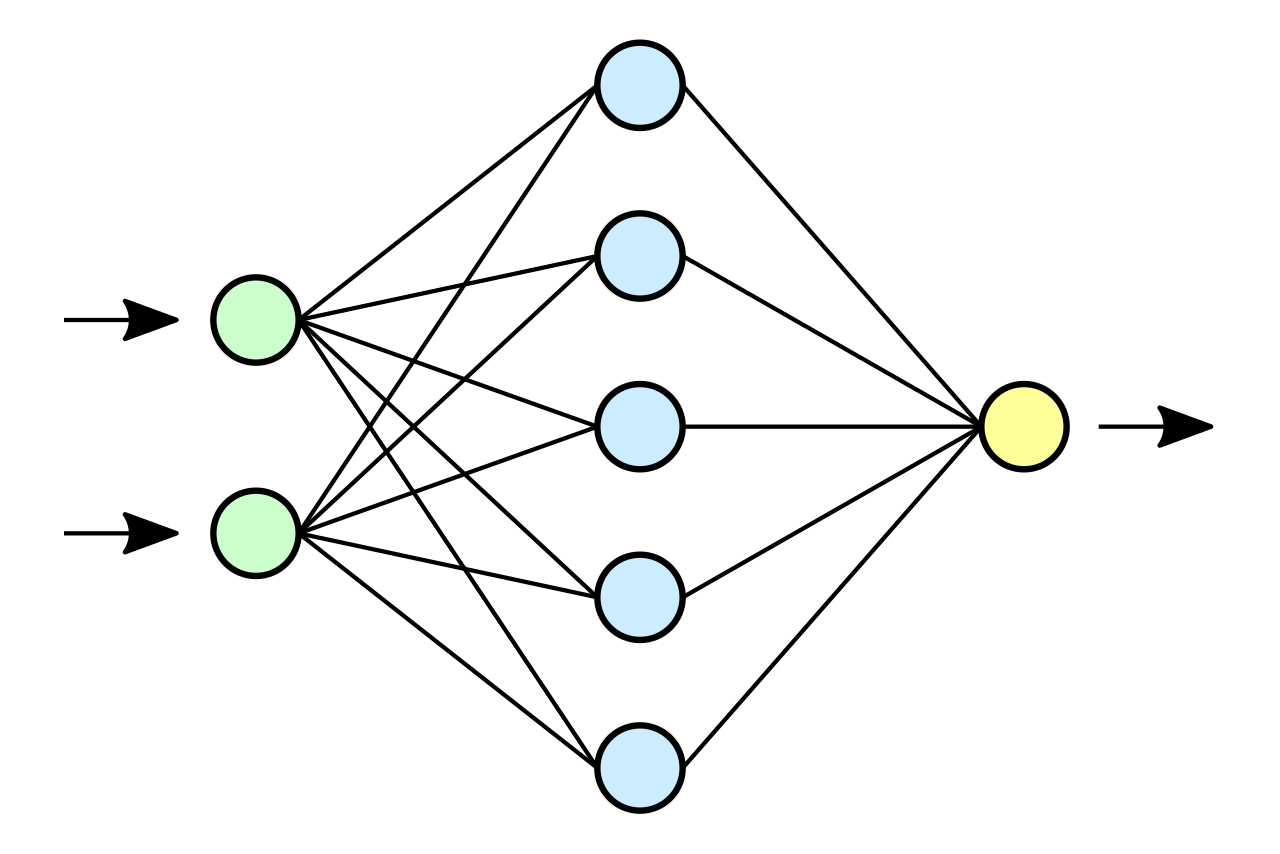

A neural network is a mathematical model composed of units called artificial neurons, organized into layers.

Each neuron performs simple calculations and transmits information to other neurons in subsequent layers.

A neural network can have multiple hidden layers, making it deeper and more capable of identifying complex patterns.

In general, a neural network consists of:

Input Layer: Receives raw data.

Hidden Layers: Perform intermediate computations and extract patterns.

Output Layer: Produces the model's final result, such as a class label or a predicted value.
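In code, each layer's weights are often stored as a matrix with one row per neuron and one column per incoming input. The sketch below is a minimal illustration using NumPy with hypothetical layer sizes (3 input features, 4 hidden neurons, 1 output neuron). It shows how the layer structure described above becomes array shapes. Note that the input layer has no weights of its own; it only holds the feature values.

```python
import numpy as np

# Hypothetical layer sizes: 3 input features, 4 hidden neurons, 1 output neuron.
layer_sizes = [3, 4, 1]

rng = np.random.default_rng(seed=0)

# One weight matrix and one bias vector per connection between consecutive layers.
# weights[i] has shape (neurons in the next layer, neurons in the current layer).
weights = [rng.normal(size=(n_out, n_in))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

for i, (W, b) in enumerate(zip(weights, biases)):
    print(f"layer {i + 1}: W shape {W.shape}, b shape {b.shape}")
# layer 1: W shape (4, 3), b shape (4,)
# layer 2: W shape (1, 4), b shape (1,)
```

Adding more hidden layers is just a matter of adding entries to `layer_sizes`, which is what makes a network "deeper".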

How Neural Networks Work

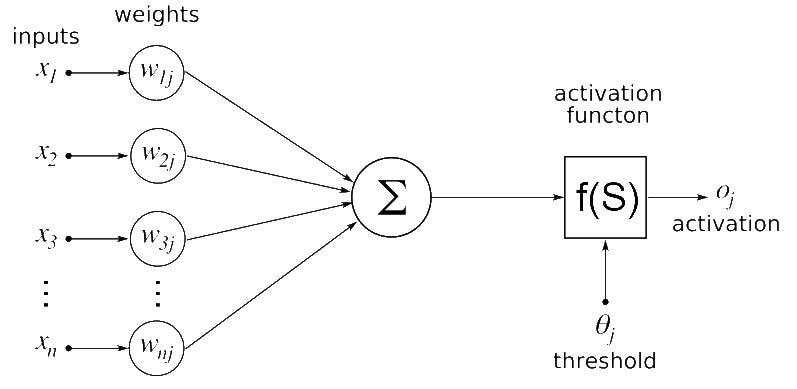

The image below represents an artificial neuron:

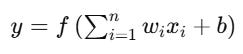

Each neuron in a neural network receives a set of weighted inputs, sums them together with a bias term, and applies an activation function to determine its output. Mathematically, we can express it using the formula:

$$y = f\left(\sum_{i=1}^{n} w_i x_i + b\right)$$

Where:

xᵢ are the inputs,

wᵢ are the weights associated with each input (what the model learns during training),

b is the bias,

f is the activation function,

y is the output of the neuron.
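The formula above can be computed directly. This is a minimal sketch assuming NumPy; the input values, weights, and bias are illustrative numbers chosen for the example, not values from a trained model. The helper name `neuron` is hypothetical.

```python
import numpy as np

def neuron(x, w, b, f):
    """Output of one artificial neuron: y = f(sum_i w_i * x_i + b)."""
    z = np.dot(w, x) + b   # weighted sum of the inputs plus the bias
    return f(z)            # activation function applied to that sum

x = np.array([0.5, -1.0, 2.0])   # inputs x_i (illustrative values)
w = np.array([0.8, 0.2, -0.5])   # weights w_i (illustrative values)
b = 0.1                          # bias

relu = lambda z: np.maximum(0.0, z)

print(neuron(x, w, b, lambda z: z))  # weighted sum plus bias, approximately -0.7
print(neuron(x, w, b, relu))         # 0.0, because ReLU zeroes out negative sums
```

By hand: 0.5·0.8 + (−1.0)·0.2 + 2.0·(−0.5) + 0.1 = 0.4 − 0.2 − 1.0 + 0.1 = −0.7.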

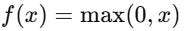

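The activation function introduces non-linearity to the model, enabling it to learn complex patterns. Writing z for the neuron's weighted sum, $z = \sum_{i} w_i x_i + b$, some popular activation functions are:

ReLU (Rectified Linear Unit): $f(z) = \max(0, z)$

Sigmoid: $f(z) = \dfrac{1}{1 + e^{-z}}$

Hyperbolic Tangent (tanh): $f(z) = \dfrac{e^{z} - e^{-z}}{e^{z} + e^{-z}}$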
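The three activation functions can be written in a few lines each. This is a minimal NumPy sketch; NumPy already provides `np.tanh`, so the tanh version simply wraps it.

```python
import numpy as np

def relu(z):
    # max(0, z): passes positive values through unchanged, outputs 0 for negatives
    return np.maximum(0.0, z)

def sigmoid(z):
    # 1 / (1 + e^(-z)): squashes any real number into the interval (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    # (e^z - e^(-z)) / (e^z + e^(-z)): squashes any real number into (-1, 1)
    return np.tanh(z)

z = np.array([-2.0, 0.0, 2.0])
print(relu(z))     # [0. 0. 2.]
print(sigmoid(z))  # approximately [0.119 0.5 0.881]
print(tanh(z))     # approximately [-0.964 0. 0.964]
```

The outputs show the key difference: ReLU is unbounded above and exactly zero for negative inputs, sigmoid is centered at 0.5, and tanh is centered at 0.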

The output of the neuron's activation function determines how strongly the neuron influences the neurons in the following layer. With ReLU, for example, a neuron whose weighted sum is negative outputs exactly zero and contributes nothing to the next layer, while a positive sum is passed through unchanged. With sigmoid, strongly negative sums produce outputs close to 0 and strongly positive sums produce outputs close to 1. Because the weights and biases that produce these sums are learned, the network effectively learns which neurons should contribute more to the final result for a given input, helping it capture the relevant features of the input data.

Neural Network Training

The learning process of a neural network involves adjusting the weights (the wᵢ values) and biases (b) to minimize the prediction error. This is done through the following steps:

Forward Propagation: The data passes through the network, generating a prediction.

Error Calculation: The prediction is compared to the actual value using a cost function, such as mean squared error or cross-entropy.

Backpropagation: The error is propagated backward through the network, using the chain rule to compute how much each weight contributed to it (the gradient of the cost function with respect to each weight).

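Weight Update: An optimization algorithm such as gradient descent moves each weight a small step in the direction that reduces the error, $w_i \leftarrow w_i - \eta \frac{\partial L}{\partial w_i}$, where η is the learning rate and L is the cost function.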

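This cycle repeats, typically over many passes through the training data, until the error stops decreasing meaningfully or a set number of iterations is reached. At that point the network has learned to map the inputs to the desired outputs as well as it can.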
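The four training steps can be sketched end to end for a tiny network. This is a minimal illustration, not a production implementation: it assumes NumPy, a hypothetical toy dataset (the logical OR of two inputs), one hidden layer of 3 sigmoid neurons, mean squared error as the cost function, plain full-batch gradient descent, and hand-picked values for the learning rate and number of epochs.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical toy data: 4 examples with 2 features each, target = logical OR.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [1]], dtype=float)

rng = np.random.default_rng(seed=42)
W1 = rng.normal(scale=0.5, size=(2, 3))  # input (2 features) -> hidden (3 neurons)
b1 = np.zeros(3)
W2 = rng.normal(scale=0.5, size=(3, 1))  # hidden (3 neurons) -> output (1 neuron)
b2 = np.zeros(1)
learning_rate = 0.5                      # hand-picked for this toy example

losses = []
for epoch in range(2000):
    # 1. Forward propagation
    h = sigmoid(X @ W1 + b1)         # hidden activations, shape (4, 3)
    y_hat = sigmoid(h @ W2 + b2)     # predictions, shape (4, 1)

    # 2. Error calculation: mean squared error
    loss = np.mean((y_hat - y) ** 2)
    losses.append(loss)

    # 3. Backpropagation: chain rule from the output back to every weight
    d_yhat = 2 * (y_hat - y) / len(X)     # dL/dy_hat
    d_z2 = d_yhat * y_hat * (1 - y_hat)   # through the output sigmoid
    d_W2 = h.T @ d_z2
    d_b2 = d_z2.sum(axis=0)
    d_h = d_z2 @ W2.T                     # gradient sent back to the hidden layer
    d_z1 = d_h * h * (1 - h)              # through the hidden sigmoid
    d_W1 = X.T @ d_z1
    d_b1 = d_z1.sum(axis=0)

    # 4. Weight update: one gradient descent step
    W2 -= learning_rate * d_W2
    b2 -= learning_rate * d_b2
    W1 -= learning_rate * d_W1
    b1 -= learning_rate * d_b1

print(f"loss: first epoch {losses[0]:.4f}, last epoch {losses[-1]:.4f}")
```

Libraries such as PyTorch or TensorFlow compute step 3 automatically, but writing it out once makes clear that backpropagation is just the chain rule applied layer by layer.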

Inference

After the neural network is trained, it can be used to make predictions on new data. This process is called inference. During inference, the weights of the network have already been adjusted and remain fixed.

The input passes through the network in the same way as during training, but without the need for error backpropagation or weight updates.

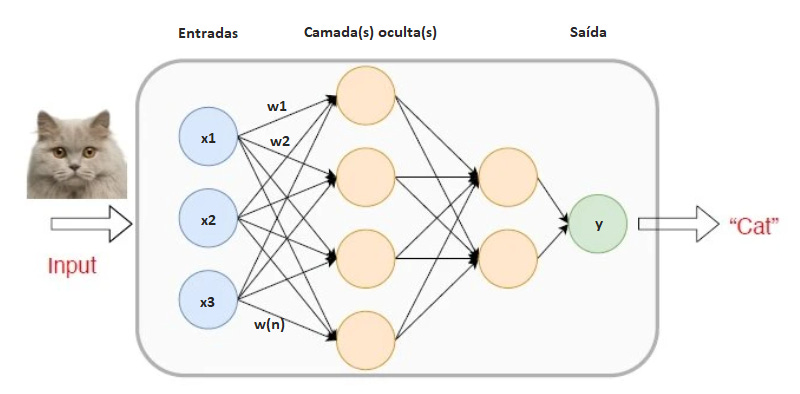

See the image below:

This image represents an artificial neural network trained to classify animals based on their characteristics. To keep things simple, let's assume it is a binary classifier that labels each animal as either "cat" or "dog."

The inference works as follows:

Input Layer:

The blue nodes in the image represent the values of the features extracted from the animal we want to classify, converted into numerical attributes.

These attributes are represented by {x₁, x₂, x₃, ..., xₙ}, where, for example, x₁ could be the weight, x₂ the height, and x₃ the tail size.

Hidden Layers:

The orange nodes represent the neurons in the hidden layers.

Each hidden neuron multiplies the input attributes (xᵢ) by the weights learned during training (wᵢ), sums the results, and adds its bias (b).

Then, each neuron applies an activation function f to this sum, producing its output. These outputs become the inputs to the next layer, and the process repeats layer by layer until it reaches the output layer.

Output Layer:

The green node represents the final output, where the model predicts the label based on the calculations of the hidden layers.

In the example in the image, the final output is "Cat," meaning the neural network classified the animal as a cat based on the analyzed characteristics.
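The inference steps above can be sketched as a single forward pass. This is a minimal illustration under several assumptions: the weights are hand-picked stand-ins for trained values (they are not taken from the figure), the features are [weight (kg), height (cm), tail length (cm)], the hidden layer has 2 ReLU neurons, and the single output neuron uses a sigmoid whose value is read as the probability of "dog", with 0.5 as the decision threshold. The function name `predict` is hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical fixed weights, standing in for values learned during training.
# Features per animal: [weight (kg), height (cm), tail length (cm)].
W1 = np.array([[0.1, 0.05, 0.0],    # hidden neuron 1
               [0.0, 0.0, 0.05]])   # hidden neuron 2
b1 = np.array([-1.0, -1.0])
W2 = np.array([2.0, -1.0])          # hidden layer -> single output neuron
b2 = -2.0

def predict(x):
    """Inference only: a forward pass with fixed weights, no backpropagation."""
    h = relu(W1 @ x + b1)           # hidden layer: weighted sums + activation
    p_dog = sigmoid(W2 @ h + b2)    # output layer: probability of class "dog"
    return "Dog" if p_dog >= 0.5 else "Cat"

print(predict(np.array([4.0, 25.0, 28.0])))   # Cat
print(predict(np.array([30.0, 60.0, 35.0])))  # Dog
```

For the first animal the output neuron's weighted sum is 2·0.65 − 0.4 − 2 = −1.1, and sigmoid(−1.1) ≈ 0.25, which falls below the threshold, so the prediction is "Cat". Nothing is updated during these calls, which is exactly what distinguishes inference from training.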

Applications of Neural Networks

Neural networks are present in various fields, such as:

Natural Language Processing (NLP): Machine translation, chatbots, and virtual assistants.

Computer Vision: Medical image diagnostics, facial recognition, and object detection.

Finance: Fraud analysis, market forecasting, and investment optimization.

Health Sciences: Drug discovery, automated diagnostics, and personalized medicine.

Conclusion

Neural networks have revolutionized artificial intelligence, enabling impressive advancements in various fields.

Whether for academic research, product development, or exploring new ideas, learning about neural networks is an essential step for anyone interested in AI.

In the upcoming chapters, we will explore Deep Learning and also look at a practical example of a neural network.

Stay tuned!! 🚀