A practical guide to running Gemma 3 270M on your cellphone

If you keep up with the latest in AI, you’ve probably heard of the Gemma model family, developed by Google DeepMind.

These models were designed to balance performance and efficiency, so developers and researchers can build practical solutions without depending on large-scale infrastructure.

In recent months, this family has grown rapidly, bringing major updates such as robust versions for servers, optimized variants for healthcare, and even multimodal models.

Now, the latest release is Gemma 3 270M, an ultracompact model with 270 million parameters, designed to be fast, inexpensive to run, and easy to specialize for specific tasks.

And the best part: you can even run the model on your phone!
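To see why a 270-million-parameter model fits on a phone, here is a rough back-of-the-envelope estimate of how much memory the weights alone would take in a few common numeric formats. This is a sketch under stated assumptions: it counts only the weights (no activations, cache, or runtime overhead), and the list of formats is an illustrative choice, not something taken from Google's documentation.

```python
# Rough weight-memory estimate for a 270M-parameter model.
# Assumption: only the weights are counted; activations, caches and
# runtime overhead are ignored, so real usage will be higher.
PARAMS = 270_000_000

# Bits per weight for a few common storage formats (illustrative choices).
BITS_PER_WEIGHT = {"float32": 32, "float16": 16, "int8": 8, "int4": 4}


def weight_megabytes(params: int, bits: int) -> float:
    """Return the size of the weights in megabytes (1 MB = 10**6 bytes)."""
    return params * bits / 8 / 1_000_000


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>8}: {weight_megabytes(PARAMS, bits):6.0f} MB")
```

Under these assumptions the weights come to roughly 1080 MB in float32 and about 135 MB at 4 bits per weight, which is small next to the memory of a recent phone.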

What makes Gemma 3 270M special?

Imagine having a powerful AI assistant that uses only 0.75% of your phone’s battery for 25 full conversations (according to Google’s internal tests on a Pixel 9 Pro)!

Advantages:

✅ Privacy: everything runs locally, with no need for the cloud or an internet connection.

✅ Speed: almost instant responses, with no network latency.

✅ Efficiency: a small model, ideal for tasks like text classification, entity extraction, sentiment analysis, or even creative generation.

✅ Specialization: easy to adapt through fine-tuning, yielding custom models that are cheap and fast to train.

Gemma 3 270M represents a paradigm shift: we don’t always need large, heavy models. Just like we don’t use a sledgehammer to hang a picture, we don’t need trillion-parameter models for every task.
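To put the battery figure quoted earlier into perspective, the short calculation below turns "0.75% for 25 conversations" into a per-conversation cost and a rough number of conversations per full charge. It is only a sketch: it assumes battery use scales linearly and that nothing else on the phone draws power, so treat the result as an optimistic upper bound rather than a measurement.

```python
# Figures quoted in the text (Google's internal test on a Pixel 9 Pro).
BATTERY_USED_PERCENT = 0.75
CONVERSATIONS = 25


def percent_per_conversation(used_percent: float, conversations: int) -> float:
    """Average battery percentage consumed by one conversation."""
    return used_percent / conversations


def conversations_per_charge(used_percent: float, conversations: int) -> float:
    """Conversations per 100% of battery, assuming purely linear scaling."""
    return 100 / percent_per_conversation(used_percent, conversations)


cost = percent_per_conversation(BATTERY_USED_PERCENT, CONVERSATIONS)
total = conversations_per_charge(BATTERY_USED_PERCENT, CONVERSATIONS)
print(f"Battery per conversation: {cost:.2f}%")
print(f"Conversations per full charge (linear estimate): {total:.0f}")
```

That works out to about 0.03% of battery per conversation, or on the order of 3,300 conversations per charge under this simplified model.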

How to get started?

You can try the model on several popular platforms:

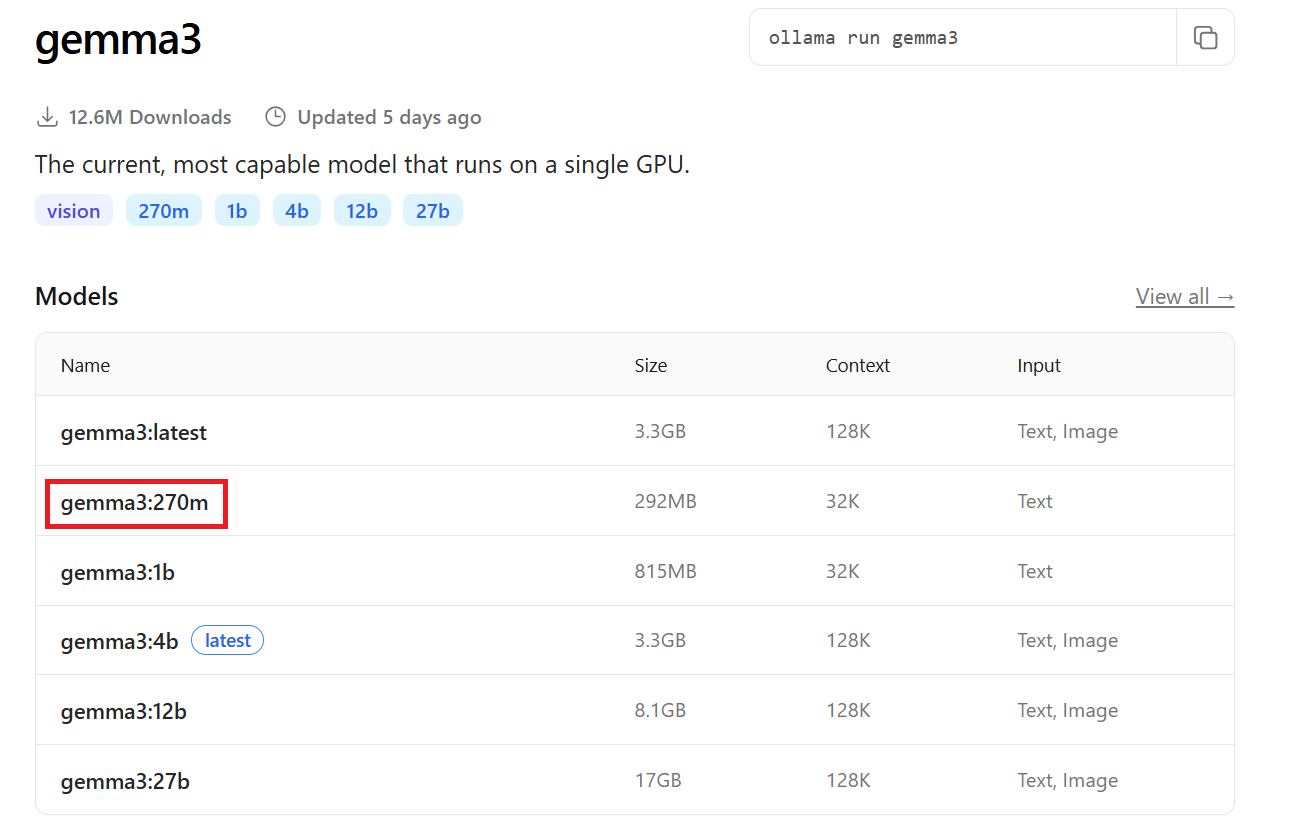

Ollama → to run locally on desktop

Hugging Face → to download the checkpoints

LM Studio, Kaggle, or Docker → ready-to-use environments for experimentation
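If you go the Ollama route on desktop, one way to try a task such as sentiment analysis is to send a prompt to Ollama's local HTTP API. The sketch below uses only the Python standard library. It assumes Ollama is running on its default address (`http://localhost:11434`) and that the model has been pulled under the tag `gemma3:270m`; check both against your own setup. The prompt wording and the function names are hypothetical, chosen only for illustration.

```python
import json
import urllib.request

# Assumptions: Ollama's default local endpoint and the tag "gemma3:270m".
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "gemma3:270m"


def build_sentiment_prompt(text: str) -> str:
    """Build a short classification prompt (illustrative wording)."""
    return (
        "Classify the sentiment of the following review as "
        "positive, negative, or neutral. Answer with one word.\n\n"
        f"Review: {text}\nSentiment:"
    )


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Create a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def classify(text: str) -> str:
    """Send the prompt to a running Ollama server and return its reply."""
    request = build_request(build_sentiment_prompt(text))
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read())["response"].strip()
```

With the server running and the model downloaded (for example via `ollama pull gemma3:270m`), calling `classify("The battery life is fantastic")` should return the model's one-word answer. A model this small may need a more tightly constrained prompt, or fine-tuning, to answer reliably.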

How to run it on your phone?
